Migrate data into mongodb

Posted on January 4th, 2015

Whilst migrating some websites, I wondered how I would handle moving the existing data from mysql to mongodb. (My existing code never dealt with this situation, and would read / write data directly to mongodb.)

So a quick google gave me a few options.

* mongify – from http://mongify.com/

* mongoimport – comes with mongodb when installed (should be in your path after installing mongodb, in the bin folder e.g. /usr/local/mongodb/bin/mongoimport)

* custom script

Since I had only a very small import, installing mongify seemed like overkill, so I reserved this approach for later (feel free to check it out if you have more serious migrations planned!). Also, I wanted a solution that didn’t have to connect to both mongodb AND mysql. It sounds crazy, but imagine only having mysql on the source, and only having mongodb on the destination – though see my thoughts at the end.

I wanted something more like the mysql command line tools, where the mysql command can import a file exported by the mysqldump utility, and mongoimport sounded like a good start. It can import data from json, tsv and csv files created by the mongoexport utility. Trouble is, I wasn’t exporting from mongodb, and a mysqldump file would be no use here.

If I could just find some way to export my data as json, I could then try passing it to mongo. Luckily for me, I had a RESTful API sat in front of the MySQL database, so it was a trivial matter to export the whole data set as a json array using a simple HTTP GET request 😀

The data set I exported was a single table (a really simple example!) containing a list of wines, which I saved as “wines.json”. (If you wish to replicate this, set up the wine cellar example from https://github.com/ccoenraets/wine-cellar-php and use the Slim API to get the data.) Unfortunately, mongoimport didn’t seem to like this file for some reason.
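The post never establishes why mongoimport rejected the file. One common cause, offered here purely as an assumption, is that mongoimport expects one JSON document per line by default, whereas an API export is usually a single JSON array (mongoimport has a `--jsonArray` flag for that case). As a rough sketch of the other way round, the Go snippet below turns a JSON array into one-document-per-line output. The function names `arrayToNDJSON` and `convertString` and the sample records are made up for illustration.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"strings"
)

// arrayToNDJSON reads a single JSON array from r and writes each element
// to w as one compact line of JSON. It returns the number of documents.
func arrayToNDJSON(r io.Reader, w io.Writer) (int, error) {
	dec := json.NewDecoder(r)

	// The first token must be the opening '[' of the array.
	tok, err := dec.Token()
	if err != nil {
		return 0, err
	}
	if d, ok := tok.(json.Delim); !ok || d != '[' {
		return 0, fmt.Errorf("expected a JSON array, got %v", tok)
	}

	count := 0
	for dec.More() {
		// RawMessage keeps the record's content untouched.
		var doc json.RawMessage
		if err := dec.Decode(&doc); err != nil {
			return count, err
		}
		// Compact strips any pretty-printing so the record fits on one line.
		var line bytes.Buffer
		if err := json.Compact(&line, doc); err != nil {
			return count, err
		}
		line.WriteByte('\n')
		if _, err := w.Write(line.Bytes()); err != nil {
			return count, err
		}
		count++
	}
	return count, nil
}

// convertString is a small convenience wrapper for in-memory input.
func convertString(s string) (string, error) {
	var out bytes.Buffer
	if _, err := arrayToNDJSON(strings.NewReader(s), &out); err != nil {
		return "", err
	}
	return out.String(), nil
}

func main() {
	// Hypothetical sample standing in for the real wines.json export.
	sample := `[
  {"id": 1, "name": "Example Red"},
  {"id": 2, "name": "Example White"}
]`
	out, err := convertString(sample)
	if err != nil {
		fmt.Println("convert failed:", err)
		return
	}
	fmt.Print(out)
}
```

Output in this shape can then be tried with something like `mongoimport --db test --collection products --file wines.ndjson`; alternatively, passing `--jsonArray` with the original file may avoid the conversion altogether.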

So that left me with the option of a custom import script, so I hacked up the following file. It is just a quick and dirty php script to read each record (document in mongodb parlance) from the array in the wines.json file, and insert it into a collection in mongo.

[code language="php"]
<?php

$wines = json_decode(file_get_contents('wines.json'));

// var_dump($wines);

$mongo = new MongoGateway();

foreach ($wines as $wine) {
    $mongo->insertDocument((array) $wine);
}

exit;

class MongoGateway {

    private $conn;
    private $db;

    public function __construct() {
        try {
            // Connect to MongoDB
            $this->conn = new Mongo('localhost');

            // connect to test database
            $this->db = $this->conn->test;
        }
        catch ( MongoConnectionException $e )
        {
            // if there was an error, we catch and display the problem here
            echo $e->getMessage();
            die('error');
        }
        catch ( MongoException $e )
        {
            echo $e->getMessage();
            die('error');
        }
    }

    public function __destruct() {
        // close connection to MongoDB
        $this->conn->close();
    }

    public function insertDocument(array $document) {
        try {
            // a new products collection object
            $collection = $this->db->products;

            // insert the array
            $collection->insert( $document );

            echo 'Document inserted with ID: ' . $document['_id'] . "\n";
        }
        catch ( MongoException $e )
        {
            echo $e->getMessage();
            die('error');
        }
    }
}
[/code]

Thoughts:

Perhaps I could have achieved this by installing mongify twice: once to perform the export on the source (mysql) db, then copying the resulting file over and using mongify again to import that file into the destination db.

I also discovered that using mongoimport/mongoexport risks losing some fidelity of the native BSON types used in mongo internally, which don’t have representations in json (probably not a real risk for me, but you might want to look at mongodump/mongorestore or db.cloneCollection if this sounds like it might affect you).

I’m sure there are plenty of ways to clean the script up, but I only ever expected to use it to migrate a couple of schemas so didn’t spend much time on it. I’m posting it here in case anyone can use it for inspiration (No warranties! Use at your own risk etc) as it served its purpose well enough for me.

It borrows heavily from the examples given at http://www.phpro.org/tutorials/Introduction-To-MongoDB-And-PHP-Tutorial.html

PHP-free websites, using varnish and golang

Posted on January 1st, 2015

Websites without php are not a new thing, but if you have been in a habit of coding sites using a LAMP stack for a while, then it can feel very strange moving back out from the familiar comfort zone of Apache and PHP.

Recently I challenged myself to do exactly that – abolish my old php habits and rewrite all my sites using go. I was itching to rewrite them anyway, to make them more RESTful as I went, so I figured why not try and put them into go at the same time?

This guide will help you create a static website from your existing files, served by a go webserver on port 3000, which is made available to visitors through a varnish caching proxy server on port 80, which passes through requests to the backend port 3000. The go webserver can also be extended to provide more complex functionality later.

Preparation – install go lang

Turns out that golang doesn’t have a binary distribution for RHEL/CentOS distros – and it doesn’t compile nicely (some tests don’t succeed) so you have to use make.bash instead of all.bash to get a ‘go’ binary. See this post if you want more details: http://dave.cheney.net/2013/06/18/how-to-install-go-1-1-on-centos-5

Ok, so to start with, how do you set up go to run a basic, static site – composed of nothing more than html, css, and images? Luckily I had such a site, so it was a simple matter of turning off apache and mysql (they weren’t being used for anything else on this server) and then creating my new structure.

If you have used plesk or any other control panel, you may find your website docroot in a folder location such as /var/www/domainname/httpdocs

So, I created a directory (as root) /goprojects/domainname/ and in here I can create my go program. I also created a /goprojects/domainname/public/ directory, and this is where all my html, css and images will reside.

Next I `export GOPATH=/goprojects/` and am almost done (I need to use the full path to the ‘go’ command, but later I will edit my bash profile so it is in my path or set up an alias.)

I am going to use go-martini and create a server.go file in /goprojects/domainname/ as per the readme at https://github.com/go-martini/martini (see step 1 of 2 below)

This is a fully fledged RESTful implementation in go, but we don’t need to specify any routes now – if an asset exists in the public/ folder we created, it will simply serve it. Simples. Yes, we could use a simpler http server class, but I’m planning on using this package for the more complex sites and I like a little consistency.
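For comparison, here is a rough sketch of what the “simpler http server” route might look like using only Go’s standard net/http package. It is not what this guide uses; the helper names `staticHandler` and `statusFor` are made up for illustration, and it assumes Go 1.16 or newer (for os.MkdirTemp and os.WriteFile). So that it can be run without touching port 3000, it serves a throwaway temp directory through an in-memory recorder.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"os"
	"path/filepath"
)

// staticHandler serves whatever files exist under dir, which is roughly
// the job the public/ folder does in this guide.
func staticHandler(dir string) http.Handler {
	return http.FileServer(http.Dir(dir))
}

// statusFor sends a GET for path straight to the handler (no network
// involved) and returns the HTTP status code it answered with.
func statusFor(h http.Handler, path string) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code
}

func main() {
	// Throwaway stand-in for /goprojects/domainname/public/.
	dir, err := os.MkdirTemp("", "public")
	if err != nil {
		fmt.Println("could not create temp dir:", err)
		return
	}
	defer os.RemoveAll(dir)

	css := filepath.Join(dir, "style.css")
	if err := os.WriteFile(css, []byte("body{}"), 0o644); err != nil {
		fmt.Println("could not write sample file:", err)
		return
	}

	h := staticHandler(dir)
	fmt.Println("/style.css   ->", statusFor(h, "/style.css"))
	fmt.Println("/missing.css ->", statusFor(h, "/missing.css"))

	// To serve for real on the port used in this guide, something like:
	//   http.ListenAndServe(":3000", staticHandler("public"))
}
```

The trade-off is the one described above: this is less to install, but you would be wiring up routing yourself once the site needs more than static files.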

For more info on building RESTful sites in go, try going through the martini docs or read walkthroughs like http://thenewstack.io/make-a-restful-json-api-go/ – it’s beyond the scope of this quick intro.

Step 1 of 2

Create server.go as follows:

package main

import "github.com/go-martini/martini"

func main() {
	m := martini.Classic()
	m.Get("/", func() string {
		return "Hello world!"
	})
	m.Run()
}

Then install the Martini package (go 1.1 or greater is required):

go get github.com/go-martini/martini

Then run your server:

go run server.go

You will now have a Martini webserver running on localhost:3000.

Step 2 of 2

Install varnish using your favourite package manager, e.g. `yum install varnish`. Make sure it isn’t running yet, e.g. check with `service varnish status` and stop it with `service varnish stop`.

By default varnish sits on a high port (6081, with the admin interface on 6082) and reverse proxies to localhost port 80. This is ok for testing, but you may need to edit DAEMON_OPTS in the /etc/sysconfig/varnish file now, replacing 6081 with 80 so that varnish listens where apache previously would have been. The admin port 6082 can be left alone or changed to suit your security requirements.

We then change the default.vcl file and tell it to use localhost port 3000 as the backend (our go webserver), save and restart.
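One way to confirm that requests really are passing through varnish is to look at the response headers. The sketch below assumes a stock varnish configuration, which adds an `X-Varnish` header to responses it delivers; if your VCL strips that header, this check will report false. The function name `viaVarnish` and the program itself are illustrative only.

```go
package main

import (
	"fmt"
	"net/http"
	"os"
)

// viaVarnish reports whether the response headers carry an X-Varnish
// header (assumption: the varnish configuration has not removed it).
func viaVarnish(h http.Header) bool {
	return h.Get("X-Varnish") != ""
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: checkvarnish <url>")
		return
	}

	// Fetch the page through port 80 (varnish) rather than port 3000.
	resp, err := http.Get(os.Args[1])
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()

	fmt.Println("status:", resp.Status)
	fmt.Println("served via varnish:", viaVarnish(resp.Header))
}
```

Run it against the public address of the site; pointing it directly at localhost:3000 should report false, since that bypasses the proxy.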

And you’re done!

BOOTP server

Posted on May 10th, 2009

Setting up a BOOTP / TFTP server sounds tricky, but in reality it is marvellously simple. I used it to install IRIX over the network on my SGIs.

In this example I shared IRIX 5.3 from my Origin 200 running IRIX 6.5.xx to my Indigo R3000. If you are struggling with SCSI bus reset errors from that old cdrom drive, this is a much simpler route to take.

on the server:

- /etc/hosts – not required initially, but lets you address the client by hostname from the server.

- /etc/ethers – specify the mac address of the client(s)

08:00:69:c0:ff:ee iris

- /etc/bootptab – this specifies the hostname, IP, (mac?) and the directory to boot.

iris 1 08:00:69:c0:ff:ee 192.168.1.118 /cds/irix53/stand/sash.IP12

- /etc/inetd.conf – here you enable the bootp and tftpd services. It is advised that you use the -s switch to specify which directory(ies) the user can see, otherwise they will have access to all world readable ones (not so bad on a closed network, still better to specify though).

bootp dgram udp wait root /usr/etc/bootp bootp

tftp dgram udp wait guest /usr/etc/tftpd tftpd -s /cds/irix53

- finish with this command: /etc/killall -HUP inetd
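If you have several clients to add, the /etc/ethers and /etc/bootptab entries shown above can be generated rather than typed. The following is only a sketch: the `client` type and helper names are invented for illustration, and the field order (including the literal `1` in the bootptab line) is simply copied from the example entries in this post rather than taken from the IRIX documentation.

```go
package main

import (
	"fmt"
	"net"
)

// client holds the details this post puts in /etc/ethers and /etc/bootptab.
type client struct {
	Host     string
	MAC      string
	IP       string
	BootFile string
}

// validate catches typos in the MAC and IP before they reach a config file.
func (c client) validate() error {
	if _, err := net.ParseMAC(c.MAC); err != nil {
		return fmt.Errorf("bad MAC address %q: %v", c.MAC, err)
	}
	if net.ParseIP(c.IP) == nil {
		return fmt.Errorf("bad IP address %q", c.IP)
	}
	return nil
}

// ethersLine builds the "<mac> <hostname>" entry for /etc/ethers.
func ethersLine(c client) (string, error) {
	if err := c.validate(); err != nil {
		return "", err
	}
	return fmt.Sprintf("%s %s", c.MAC, c.Host), nil
}

// bootptabLine builds the /etc/bootptab entry in the same field order
// as the example above: hostname, 1, MAC, IP, boot file.
func bootptabLine(c client) (string, error) {
	if err := c.validate(); err != nil {
		return "", err
	}
	return fmt.Sprintf("%s 1 %s %s %s", c.Host, c.MAC, c.IP, c.BootFile), nil
}

func main() {
	iris := client{
		Host:     "iris",
		MAC:      "08:00:69:c0:ff:ee",
		IP:       "192.168.1.118",
		BootFile: "/cds/irix53/stand/sash.IP12",
	}
	for _, build := range []func(client) (string, error){ethersLine, bootptabLine} {
		line, err := build(iris)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Println(line)
	}
}
```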

on the client

enter the command monitor by selecting option 5, and a quick hinv -v to double check all the hardware is recognised may avoid confusion later on.

setenv netaddr 192.168.1.118

setenv notape 1

boot -f bootp()192.168.1.119:/path/to/diskimage/stand/fx.IP12 --x

Now repartition / format the drive as necessary. I had to do this a couple of times: the first time it appeared to keep the old installation, and the second time the amount of free space was well below the 2GB capacity. That left me with 2 failed installations, but the third attempt was successful. Hint: during the inst phase, I selected the admin commands and looked for the mkfs option. Even though the miniroot was copied to the drive, I wiped it clean – it recovered from the shock by offering to restart the interrupted distribution.

Right, now you’ve hopefully got a clean drive – restart and

setenv netaddr 192.168.1.118

setenv notape 1

boot -f bootp()192.168.1.119:/path/to/diskimage/stand/sash.IP12

install -n

For some reason, this didn’t work too well for me; it kept looking for /dev/tape and I couldn’t trick it with setenv tapedevice /path/to/diskimage =(

in this case, just select option 2, install the os.

are you using remote tape [y/n] : n

enter the hostname:directory – 192.168.1.119:/path/to/diskimage/dist

once you have the miniroot, and inst running, you should be able to select additional distributions by setting the ‘from’ to /path/to/disk2image/dist, as appropriate.

HINTS:

This assumes the server is IP 192.168.1.119, and the client is 192.168.1.118

It also assumes you have copied the IRIX 5.3 disc from /CDROM to /cds/irix53/ on the server.

BOOTP won’t work across a router, but if you can NFS mount the files across the router, you could establish a nearby BOOTP server on the local segment.

In order to edit the files, especially on a text console, a quick ‘man vi’ should help

serial port settings are often 9600-8n1 no flow control, see ‘man serial’

LINKS:

securing IRIX (more 6.5.xx specific, but still useful)

http://www.blacksheepnetworks.com/security/resources/IRIX_65.html

http://sgistuff.g-lenerz.de/tips/security.php

http://www.siliconbunny.com/irix-security/

Switch to a Linux Terminal Server Project network environment

Posted on April 27th, 2009

Get your old computers working as UNIX terminals! Set up a Linux Terminal Server with LTSP on Ubuntu, and use it from a remote workstation!

I’m sick of losing machines and having to reinstall from scratch, so if all goes well on this project, I will be able to run my blade server without even thinking about how to set each one up, beyond configuring each blade to boot from the LAN instead of the local hard drive, and instead concentrate on maintaining just the one central server.

Before you cry Single Point Of Failure – and it is – my comeback is that instead of having to make each server resilient, I only have to harden the one, saving time, effort and computer resources. Backups are essential, so I will have a remote machine rsync essential data on a cron schedule. I will also improve the resilience of the server itself with a couple of spare ethernet interfaces and a bit of software RAID (mirroring the partitions); optionally, a redundant hot/warm spare or even an offline server knocking about will do for my purposes =)

To get an LTSP server running on an existing system running Ubuntu, Kubuntu, Xubuntu (which has an LTSP install option) or even Edubuntu, which sets up LTSP by default, you will need to set up a static Ethernet interface where you will attach the thin clients, install two packages and run a single command.

On the server (it can be a desktop, but for this scenario let’s call it the server) configure your static / spare interface for the thin clients to have the IP 192.168.1.1, then follow the instructions below.

sudo apt-get install ltsp-server-standalone openssh-server

Create your Thin Client environment on the server with:

sudo ltsp-build-client

After that, you will be able to boot your first thin client. Note that if you want to use another IP than the above, you need to edit the /etc/ltsp/dhcpd.conf file to match the IP values and restart the dhcp server. If the IP changes (shame on you for not using IP aliases with IP/MAC address takeover tut tut) after you have done the initial setup, run

sudo ltsp-update-sshkeys

to make the ssh server aware of the change.

nb The ltsp-utils package from universe (for dapper) is for a different ltsp version, installing them together will break, so I gather. Maybe worth avoiding that, unless you have a test machine you can experiment on.

Vyatta – open source router and firewall.

Posted on April 27th, 2009

The Vyatta open-source router firewall – Welcome to the Dawn of Open-Source Networking!

If your network is growing, and you need to improve your networking skills, so you can convince your boss to commit sums of money on an expensive hardware solution – e.g. Cisco gear – try installing Vyatta linux natively onto a spare old pc with some network cards in, or use a vmware image.

Then you can practice setting up your network, however you please, all day long! =)

Apple OSX admin tips, running webmin, usermin and openSSL

Posted on April 27th, 2009

These are some notes I took whilst enabling remote https:// administration of my Mac running OSX. Webmin provides a nice web gui for administration of your server, but you would probably want to restrict access to localhost, a few known and trusted hosts, or IPs on the local lan subnet for security. In addition, using OpenSSL makes this a better solution, if you prefer a gui console or do not have access to ssh / command line. Newer versions will always come out, please check – and note that this information can only serve as a ‘rough guide’.

Some things you might find useful before we begin:

—-

Use NetInfo manager, authenticate and enable root user

http://forums.ionmac.com/lofiversion/index.php/t309.html

use

$ defaults write com.apple.finder ShowAllFiles -boolean YES

(instead of $ defaults write com.apple.finder ShowAllFiles True )

this is also cool

$ defaults write "Apple Global Domain" AppleScrollBarVariant DoubleBoth

—-

installing perl / Net_SSLeay for SSL access is easy – just visit http://www.webmin.com/osx.html and follow directions

These instructions, contributed by Kevin Capwell, will allow you to install webmin on any Apple Macintosh OS X server. The version that I was using is as follows:

Server: 10.3

Perl: 5.8.1-RC3 (to see your version, open Terminal)

$ cd /usr/bin, then type

$ perl --version

OpenSSL: 0.9.7b (to see your version, open Terminal)

$ cd /usr/bin, then type

$ openssl version

INSTALL DEVELOPER TOOLS (i.e. use OS X 10.3 XCode CD and update!)

1. Go to https://connect.apple.com/ and become a member of ADC – it’s free!

2. Click on ‘Download Software’.

3. Click on ‘Developer Tools’.

4. Download the Xcode Tools v1.0 and the 1.0.1 update. As of this writing the Xcode CD download is in 20 parts; however, Xcode should come with your boxed copy of 10.3.

5. Click twice on the Xcode.dmg icon.

6. Click twice on the ‘Developer’ package.

7. Enter your administrator password when you are prompted.

8. After selecting the drive to install the developer tools, click the ‘Customize’ button. Make sure to check the BSD SDK option.

9. Perform the install.

INSTALL THE NET_SSLEAY.PM

1. Download and install the Perl Mod “Net::SSLeay”

2. Go to the web page

http://www.cpan.org/modules/by-module/Net/

3. Download the ‘Net_SSLeay.pm-1.25.tar.gz’ file. This version was tested with the Perl and OpenSSL that are installed with 10.3. In my case this is Perl 5.8.1 and OpenSSL 0.9.7b (to see your versions look at the commands above)

4. I copied the Net_SSLeay.pm-1.25.tar.gz to /usr/local

5. tar -zxvf Net_SSLeay.pm-1.25.tar.gz

6. cd Net_SSLeay.pm-1.25

7. type ‘perl Makefile.PL -t’ (without the quotes; this builds and tests). You should see a successful install message

8. Issue the ‘sudo -s’ command (without the quotes) – enter your admin password. You should now see a root# prompt at the beginning of each line you type.

9. Type ‘make install’ (without the quotes).

10. If the command "perl -e 'use Net::SSLeay'" (without the outer "" quotes) doesn’t output any error message, then the SSL support that webmin needs is properly installed.

INSTALL THE AUTHEN_PAM.PM

1. Download and install the Perl Mod “Authen::PAM”

2. Go to the web page

http://www.cpan.org/modules/by-module/Authen/

3. Download the ‘Authen-PAM-0.15.tar.gz’ file

4. I copied the Authen-PAM-0.15.tar.gz to /usr/local

5. tar -zxvf Authen-PAM-0.15.tar.gz

6. cd Authen-PAM-0.15

7. type ‘perl Makefile.PL -t’ (without the quotes builds and tests) You should see a successful install message

8. Issue the ‘sudo -s’ command (without the quotes) – enter your admin password. You should now see a root# prompt at the beginning of each line you type.

9. Type ‘make install’ (without the quotes).

INSTALL WEBMIN

1. Go to http://www.webmin.com/download.html

download the current Unix tar/gzip version.

2. I copied the webmin-1.340.tar.gz to /usr/local

3. tar -zxvf webmin-1.340.tar.gz

4. cd webmin-1.340

5. type ‘./setup.sh’ (without the quotes).

6. Accept defaults for config and log file directory (one return for each will do)..

7. Accept the default path to perl (it should test ok).

8. Accept the default port for webmin (port 10000).

9. Login name can be anything you want (the default is admin).

10. Login password can be anything you want. Then you will be asked to verify the password.

11. If you followed the instructions above correctly you will be prompted with ‘Use SSL (y/n):’ you can now answer Y. This will encrypt your connections with the Xserve.

12. Answer Y to Start Webmin at boot time.

13. After the install is complete, copy the file pam-webmin to /etc/pam.d/webmin and re-start Webmin with /etc/webmin/stop ; /etc/webmin/start. This will enable PAM authentication, if you need it.

If everything installs correctly you will see ‘Webmin has been installed and started successfully. Use your web browser to go to:

https://:10000

and login with the name and password that you entered previously.’

INSTALL USERMIN

1. Go to http://www.webmin.com/udownload.html and download the current Unix tar/gzip version.

2. I copied the usermin-1.270.tar.gz to /usr/local

3. tar -zxvf usermin-1.270.tar.gz

4. cd usermin-1.270

5. type ‘./setup.sh’ (without the quotes).

6. Accept defaults for config and log file directory (one return for each will do).

7. Accept the default path to perl (it should test ok).

8. Accept the default port for usermin (port 20000).

9. If you followed the instructions above correctly you will be prompted with ‘Use SSL (y/n):’ you can now answer Y. This will encrypt your connections with the Xserve.

10. After the install is complete, copy the file pam-usermin to /etc/pam.d/usermin and re-start Usermin with /etc/usermin/stop ; /etc/usermin/start. This will enable PAM authentication for all users who login.

Usermin is useful for changing passwords and (optionally) reading mail. It is a terrific user tool with security built in. I highly recommend that you experiment with it for ease of use with your users.

Fujitsu SPARC servers

Posted on April 27th, 2009

If you need a meaty Unix server to run Solaris on, have a look at Fujitsu SPARC Unix Servers – enterprise class!!

Of course, it probably won’t be long until we are seeing Oracle branded servers…

(I, for one, welcome the new corporate overlords)

Solaris 10 developers edition

Posted on April 27th, 2009

If you want to try OpenSolaris out, download OpenSolaris and see this link about VMware tools for Solaris

old cpu’s

Posted on April 27th, 2009

I’m still in the middle of restoring an old UNIX computer – for reasons most people cannot comprehend. I mean, what good can an old UNIX terminal be? What could you do with a 33MHz cpu, 96MB of ram, 500MB of hard drive and a full 24bit 3D graphics subsystem (apart from spin polygons and play dogfight long before PCs even knew what 2D graphics were…?)

Well, just to remind ourselves, these are the pc processors which we all thought were really up to date at the time =)

The Red Hill CPU guide

http://redhill.net.au/c/c-4.html

How to install a Linux virus – 7 steps

Posted on April 27th, 2009

Basic Installation

==================

Before attempting to compile this virus make sure you have the correct version of glibc installed, and that your firewall rules are set to `allow everything’..

1. Put the attachment into the appropriate directory eg. /usr/src

2. Type `tar xvzf evilmalware.tar.gz’ to extract the source files for this virus.

3. `cd’ to the directory containing the virus’s source code and type `./configure’ to configure the virus for your system. If you’re using `csh’ on an old version of System V, you might need to type `sh ./configure’ instead to prevent `csh’ from trying to execute `configure’ itself.

4. Type `make’ to compile the package. You may need to be logged in as root to do this.

5. Optionally, type `make check_payable’ to run any self-tests that come with the virus, and send a large donation to an unnumbered Swiss bank account.

6. Type `make install’ to install the virus and any spyware, trojans, pornography, penis enlargement adverts and DDoS attacks that come with it.

7. You may now configure your preferred malware behaviour in /etc/evilmalware.conf .

(Cheers to Mike Walker for sending me this humorous article!)

Coding is our passion

Usually accompanied by a caffeinated beverage - although we’re more likely sipping a latte than some Jolt! cola these days!

If you found anything on this site useful and want to say thanks, then you could always:

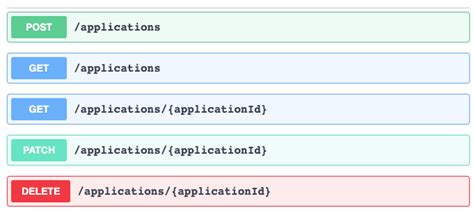

Microservices and RESTful APIs

We work with your company in a constructive way to refine your vision, exploring the Art-of-the-Possible, help write IaC (Infrastructure-as-Code) with Terraform, promote a DevOps culture and build a World Class Cloud Platform.

We can also help you go from the very basics of designing your API contract in Swagger/OAS, to building a container by writing a Dockerfile, through to creating a fully orchestrated CI/CD pipeline in Jenkins to deploy to Rancher, Kubernetes and Istio. We can help set up Kong API Gateways, and lots more besides!
