SGI Indigo R3000 Elan

Posted on April 17th, 2009

Upgrading an SGI Indigo R3000 – an 18-year-old machine – is not without risks: the Magick Smoke already escaped once, forcing me to replace the z-buffer board. However, I have sourced an alternative, and coupled with an extra 3 GEs, it should be able to take my XS24-Z up to an Elan. Well, that’s the plan.

Big thanks to Ian Mapleson, for his help sourcing the parts: http://www.sgidepot.co.uk/sgidepot/

I also plan to try to get a newer version of Perl onto IRIX 5.3, so I can possibly run Webmin. The guys at work think I’m crazy. You know, they could have a point. =)

Thank you, Jamie Cameron http://www.webmin.com

watch this space!!

update: 24/04/09 – the parts have been despatched via special delivery! Should arrive soon. Who put the idea in my head of getting Mozilla Firefox to run on IRIX 5.3, eh, Ian – who indeed? Damn you, there goes my social life this year. Oh well, no great loss =)

update: 04/05/09 – Finally succeeded in booting the machine, but not able to restore the IRIX 5.3 OS yet, due to SCSI CDROM trouble (SCSI bus reset). I’ve swapped drives and cables, to no avail. Was hoping to avoid this grief, but since my last update, I’ve lost 5 servers. (It’s been a tough week.) The 13-year-old SGI O2 which I was hoping would become the network CD share / mount point decided to blow its PSU with a tremendous explosion, the resulting electrical power surge causing a fair amount of collateral damage on that circuit.

However, I’m back up and running now, limping along on a spare PC server, and an Octane with a dodgy graphics card – at least one of those should help me get the CDROMs online. I might even get around to installing the upgraded hardware, soon!!

Oh, well… looks like I’m going to end up installing a BOOTP server again – so look out for the post on that!

BlueOnyx

Posted on February 26th, 2009

I have decided to follow the BlueOnyx distribution, as it picks up where BlueQuartz was trailing off. It is now 5106R as opposed to 5100R, and retains the sausalito / cce configuration tools.

As is my usual practice, I never try a new system out on a production server, so in this case I opted to install it on a spare HDD in an older laptop while I performed some testing.

Since the machine may become disconnected from the network after the install, making it impossible to reach the familiar ‘/login’ url from another client, I have occasionally opted to install a basic X environment on BlueQuartz. It is not good for production performance, but great for doing all-in-one testing and development on a stand-alone server, let’s say =)

There are some new features in BlueOnyx, so I couldn’t wait to try out my fresh install. The install was great, and you now have the following options for install:

– 20GB LVM partition layout

self – manual partition config

small – 10GB LVM installation layout

It is also possible to run ‘linux rescue’ … as I found out:

In order to install X + GNOME on BlueQuartz, I ran (as root):

yum update

yum grouplist

yum groupinstall "X Window System" "GNOME Desktop Environment"

This failed in BlueOnyx, something about ‘dependency libxxxx.0.so’, so I tried X + KDE instead, which appeared to install successfully. To my horror though, I got the dreaded Kernel Panic on reboot.

The solution for me was to boot ‘linux rescue’ to enter rescue mode, and

# cd /mnt/sysimage/etc/selinux

# vi config

change the line “SELINUX=enforcing” to “SELINUX=permissive”, save and

# exit

to reboot into the system. (I suggest you simply edit /etc/selinux/config after installing X, but before rebooting, to spare yourself from having to use the rescue system)
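The edit can also be scripted rather than done in vi. This is only a minimal sketch, assuming a stock config file that contains the line SELINUX=enforcing. The function name set_selinux_permissive is made up for illustration, and the path is an argument so the same helper works on the running system (/etc/selinux/config) or from the rescue environment (/mnt/sysimage/etc/selinux/config):

```shell
# Hypothetical helper (not part of BlueOnyx): switch SELinux from
# enforcing to permissive in the given config file.
# Usage: set_selinux_permissive /etc/selinux/config
set_selinux_permissive() {
    cfg=${1:-/etc/selinux/config}
    # -i.bak edits the file in place and keeps the original as <file>.bak
    sed -i.bak 's/^SELINUX=enforcing/SELINUX=permissive/' "$cfg"
}
```

Run it as root after installing X and before rebooting. The .bak copy is there in case you want to switch back to enforcing later.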

This way, instead of

…

unmounting old /dev

unmounting old /proc

unmounting old /sys

audit(1235663670.224:2): enforcing=1 old_enforcing=0 auid=4294967295 ses=4294967295

Unable to load SELinux Policy. Machine is in enforcing mode. Halting now.

Kernel panic – not syncing: Attempted to kill init!

you get

…

unmounting old /sys

INIT: version 2.86 booting

…

=D

To tweak, or not to tweak? (mysql)

Posted on January 29th, 2009

Assuming you have a cluster of MySQL servers serving your application, and you have configured them to share the load, yada yada, your next step is probably going to be asking ‘how can I improve the design of my database schema?’

Here are some links which cover that field (may I remind you to backup your database before starting, follow them at your own risk, and you cannot hold me responsible for any resulting issues!)

This one illustrates tweaking a Joomla CMS application on a VPS (shared hosting server):

http://www.joomlaperformance.com/articles/server_related/tweaking_mysql_server_23_16.html

This one discusses the differences between innodb and myisam db engines:

http://worthposting.wordpress.com/2008/06/22/innodb-or-myisam/

This appears to be the command to convert a MyISAM (the default) table to an InnoDB one, if you wanted to pursue that, but beware: the monolithic file may need more proactive maintenance and monitoring (for corruption etc, but not fragmentation issues). Again, BACKUP!!

ALTER TABLE foo ENGINE=InnoDB;

This one discusses locking and MySQL transactions – no transactions on MyISAM though! (Hence you might need InnoDB for that.)

http://forums.whirlpool.net.au/forum-replies-archive.cfm/498624.html

There’s also the problem of race conditions: two select queries (two mysql sessions, web browsers etc.) return the same row, which the application wants to update, the first to ‘accepted’, the second to ‘rejected’. Row or table locking will prevent them from writing the new value at the same time, but what happens if both processes complete in lockstep, first ‘accept’ing, then ‘reject’ing the same row, when only one outcome is acceptable? (In other words, a row cannot be accepted after it has been rejected, and vice versa.) We could prevent accidental overwrites by tightening the criteria of the where clause:

UPDATE `orders`

SET `status`='accepted'

-- the first where clause identifies the row

WHERE `order_id`='".$this->DB->quotestring($order_id)."'

-- and the second helps prevent 'logical' data collisions!

AND `status` != 'rejected';
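The guard only helps if the application then checks whether the UPDATE actually changed a row, and MySQL will tell you that via ROW_COUNT(). A rough sketch of the idea, not the code from my application: the function name guarded_accept_sql is made up, and it assumes order ids are plain integers (anything else is refused rather than quoted). It only prints the SQL, so you can read it before sending it anywhere.

```shell
# Hypothetical helper: print a guarded UPDATE plus a row-count check.
# Assumption: order ids are plain integers; anything else is rejected.
guarded_accept_sql() {
    order_id=$1
    case $order_id in
        ''|*[!0-9]*)
            echo "order id must be numeric" >&2
            return 1
            ;;
    esac
    # Backticks are escaped so the shell prints them literally.
    cat <<EOF
UPDATE \`orders\`
   SET \`status\` = 'accepted'
 WHERE \`order_id\` = ${order_id}
   AND \`status\` != 'rejected';
SELECT ROW_COUNT() AS rows_changed;
EOF
}
```

Something like `guarded_accept_sql 42 | mysql mydb` would run it (mydb is a placeholder database name). If rows_changed comes back as 0, nothing was written: the order was already rejected, was already accepted, or does not exist, so the application should report that instead of assuming success.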

Lastly, this one illustrates a number of different ways one could design a schema, “including discussions on schema architecture, common data access patterns, and replication/scale-out guidelines”, using web ‘tagging’ as an example.

tmobile usb3g on ubuntu / eee

Posted on January 20th, 2009

Updated May 2009!

I have been informed that after upgrading the eee to Ubuntu 9.04 the usb 3g stick no longer works. Even after recompiling the drivers against the kernel, and a few other attempts to solve it, it would appear that the USB-serial driver which the stick depended on is no longer in the kernel (can anyone verify?).

Current workaround for me is to stay on Ubuntu 8.10 a little while longer, and hope the manufacturer gets the updated drivers out soon =(

—

Having just recently acquired both an Asus EEE 901 and a t-mobile 530 usb 3g broadband stick, work began on figuring out how to install it.

On a stock Ubuntu (8.10) distribution, download and extract the 2 tar.gz files. These contain the v1.6 driver (as opposed to the v1.2 in Ubuntu). The README and other documentation aren’t very clear, but this is basically how I did it. These steps might not work for you, so don’t blame me if you end up summoning Cthulhu all over your hard drive, or anything else nasty happens.

I’ve only put the files here so I can download them to my own EEE over ethernet after I replace Xandros (the eee WiFi works, but the Xandros WPA is broken) and obviously, I can’t use the usb3g yet…. 😉

# this disables the old option ZeroCD driver

echo "blacklist option" >> /etc/modprobe.d/blacklist

tar xzfmv hso-1.6.tar.gz

tar xzfmv udev.tar.gz

# now cd into each directory and

make install

reboot

I found that the system can crash if the stick is removed.

These are the files from the CD-ROM that came with the stick. I shall post the md5sums, but they matched those available to download elsewhere, so you might as well use the CD if you have it.

Linux drivers:

hso-1.6.tar.gz: 30.05 KB

udev.tar.gz: 27.19 KB

EEE drivers (for Xandros?) – not required for Ubuntu

hso_connect.sh: 7.14 KB

hso-modules-2.6.21.4-eeepc_1.4-4+5_i386.deb: 30.13 KB

hso-udev_1.4-4_i386.deb: 21.03 KB

Edit: Sorry folks, looks like I’d messed up the urls, I’ve patched them now! =)

Using the DCMD distributed shell to manage your cluster

Posted on January 15th, 2009

‘dcmd’ from sourceforge.net provides a suite of commands, such as dssh. Once you have given it a list of user@hostnames, it will send the same command to each one of your desired servers, and echo the response from each one.

For example, `dssh uptime` would respond with the uptimes of all the linux / unix servers in your network or server farm. You could extend this to monitor for failed services, and attempt to restart them, for example…
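To show the idea (this is not how dcmd is implemented, just a plain ssh loop), here is a sketch. The function name run_on_hosts, the hosts file and the SSH override variable are my own inventions for illustration, and it assumes key-based ssh logins are already set up so nothing prompts for a password:

```shell
# Hypothetical stand-in for the dssh idea: run one command on every
# user@hostname listed (one per line) in a hosts file.
# Usage: run_on_hosts hosts.txt uptime
run_on_hosts() {
    hosts_file=$1
    shift
    while IFS= read -r target; do
        # skip blank lines and comment lines
        case $target in
            ''|'#'*) continue ;;
        esac
        printf '== %s ==\n' "$target"
        # stdin comes from /dev/null so ssh cannot swallow the host list
        ${SSH:-ssh} "$target" "$@" < /dev/null
    done < "$hosts_file"
}
```

Unlike the real tool, this runs the hosts one after another, so a single slow or dead server holds up the rest.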

This article explains everything:

http://www.samag.com/documents/s=8892/sam0310e/sam0310e.htm

Create a new Samba user profile

Posted on January 14th, 2009

Just a quick one tonight – how to quickly add a new user profile in (Ubuntu) linux and export their home profile directory as a Samba share. (You need to add them twice, once for linux and once for Samba.)

# useradd -d /home/foo -m foo

# passwd foo

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

# smbpasswd -a foo

New SMB password:

Retype new SMB password:

Added user foo.

A couple of things to note – you could use ‘adduser’ instead of useradd, but that will prompt you for the user’s info, which you may find you prefer (if in a non-scripted environment).

Also, ‘smbpasswd foo’ might be required if, after running the command to add a new Samba user, it creates that user with a locked account – this should allow you to set the password and unlock them.

Now connect to \\servername\username

Quick troubleshooting steps:

check all spellings in the /etc/samba/smb.conf

check spellings on windows client

read the comments in the smb.conf file! (especially the testparm hint!!)

Of course, I’m assuming you already know how to sudo apt-get install samba, configure your ‘[homes]’ directory exports in the smb.conf file, and restart samba with ‘/etc/init.d/samba restart’. If not, I got all I needed from:

Google Books (Hacking Ubuntu, by Neal Krawetz)

MySQL multimaster cluster

Posted on January 13th, 2009

I’ve been looking at ways of clustering my MySQL servers, to provide failover capabilities and also to distribute the load coming in from a farm of web servers.

There are many ways of load balancing, from DNS round-robin through Apache ServerMap directives and so on. Once you get it sorted, and have implemented some sort of session system so a visitor can be served by any web node – how do you split the load on the database servers yet maintain consistency?

If you do not have machines powerful enough to look at the MySQL Cluster methods (from 5.0 upwards) then you will probably have looked into replication. But now you have another problem…. the write-to-master, read-from-slave scenario.

OK, it isn’t a problem by itself. But if you run an application like WordPress, for example, which keeps the server address in the database – you now have the choice of modifying the application to be aware of more than one server (the master for writes, the slaves for reads) -or- modifying each database so it has a different value.

This could be very tricky in a replicated slave environment, as changes there will be overwritten again by the master, and lost?!

So, if you don’t want to have a major headache rewriting your application, or customising your databases, have a look at multimaster replication.

For my example, it involves running a mysql server instance on each webserver – and setting the ip address in the database to 127.0.0.1 instead of using a separate real ip each time. This may not be applicable to you, but it means for me, I can run any number of nodes, each with their own dedicated MySQL datasource, which will be identical across all the nodes. That’s it!

http://www.onlamp.com/pub/a/onlamp/2006/04/20/advanced-mysql-replication.html
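One thing to watch when every node accepts writes is AUTO_INCREMENT keys colliding. MySQL 5.0 has two settings for this, auto_increment_increment and auto_increment_offset. Below is a sketch that prints a my.cnf fragment for one node; the function name multimaster_cnf and the mysql-bin log name are just my choices for illustration, and a real setup needs more than this (replication users, master host settings and so on):

```shell
# Hypothetical helper: print the [mysqld] settings that give each node
# its own server id and its own slice of AUTO_INCREMENT values.
# Usage: multimaster_cnf <node_number> <total_nodes>
multimaster_cnf() {
    node=$1
    total=$2
    cat <<EOF
[mysqld]
server-id                = ${node}
log-bin                  = mysql-bin
auto_increment_increment = ${total}
auto_increment_offset    = ${node}
EOF
}
```

With three nodes, `multimaster_cnf 2 3` gives node 2 the ids 2, 5, 8 and so on, while node 1 gets 1, 4, 7, so inserts made on different nodes cannot pick the same key.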

* again thanks to Matt for pointing out that replication traffic could spiral exponentially, with every additional node, if packets are sent to unicast addresses. I will try and configure each node to send updates to the multicast address, so each SQL SELECT, UPDATE, and so on is replicated to the network only once.

backup with rsync

Posted on January 10th, 2009

OK, so we’re starting to build our cluster now, which is all well and good, except we haven’t discussed backups yet.

We are looking at having multiple physical servers share the traffic (load balancing) and, as they are online, serve as hot replacements in the event of a machine or daemon process dying (failover). We could replicate our database, to remove a single point of failure – but this doesn’t safeguard against a user ‘DROP’ing our tables and data. We might use RAID, so that if one hard drive fails, the data is not lost – but again this doesn’t stop an errant “rm -rf /*” (don’t try this at home kids!)

So, we copy our data from one machine to another, from where it can be written to usb disk, tape drive, dvd, usb flash, whatever… and possibly exported back to the network as a read only share.

rsync -avz -e 'ssh -l username' /local/source/dir/ remotefoo:/destination/dir

I like to use the command like this, so I know I’m using ssh to copy, with compression. See the rsync man page for more options.
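Before trusting a new backup command, I find it worth previewing what it would copy. A small sketch, assuming the remote user is given in the destination (user@remotefoo:/path) rather than with ‘ssh -l’; the function name backup_dir and the RSYNC override variable are made up for illustration:

```shell
# Hypothetical wrapper: preview an rsync-over-ssh backup, then run it.
# Usage: backup_dir /local/source/dir/ user@remotefoo:/destination/dir
backup_dir() {
    src=$1
    dest=$2
    # -n (--dry-run) lists what would be transferred without copying
    ${RSYNC:-rsync} -avzn -e ssh "$src" "$dest" || return 1
    # same options without -n: the real copy
    ${RSYNC:-rsync} -avz -e ssh "$src" "$dest"
}
```

Note the trailing slash on the source: with it, rsync copies the contents of the directory into the destination; without it, you get the directory itself created inside the destination.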

Ubuntu raid – postinstallation

Posted on January 10th, 2009

https://wiki.ubuntu.com/Raid has a simple script for creating a RAID array which you can adapt to your specific requirements. In my case I used it to add 2 shiny new 640GB drives to my Ubuntu machine after installing a copy of Ubuntu Desktop (I didn’t bother reinstalling the OS, I just added the array as a data store). If my OS ever self-destructs, I can just boot off my USB drive and recover my data from there – although you may need to “sudo apt-get install mdadm”.

locate a package for Ubuntu

Posted on January 9th, 2009

OK, I admit I’m being lazy and didn’t want to follow on from my last post by downloading and installing UltraMonkey by hand. But since I was away from my Ubuntu machine, I couldn’t fire up Synaptic to see if there was an Ubuntu release of the package I wanted.

As discovered by a colleague of mine, http://packages.ubuntu.com/ is the answer – it allows you to search for what you want via the web instead. Cheers, Matt! =)

Of course the package you want might not be listed, so perhaps it is available from another repository? Then you might find you need to edit your sources.list file.

Coding is our passion

Usualy accompanied by a caffeinated beverage - although we’re more likely sipping a latte than some Jolt! cola these days!

If you found anything on this site useful and want to say thanks, then you could always:

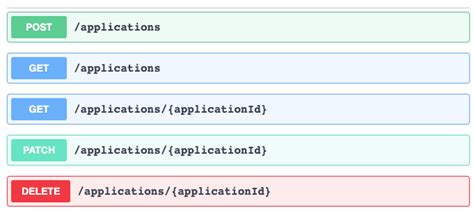

Microservices and RESTful APIs

We work with your company in a constructive way to refine your vision, exploring the Art-of-the-Possible, help write IaC (Infrastructure-as-Code) with Terraform, promote a DevOps culture and build a World Class Cloud Platform.

We can also provide help to go from the very basics of designing your API contract in Swagger/OAS to building a container by writing a Dockerfile, through to a creating a fully orchestrated CI/CD pipeline in Jenkins to deploy to Rancher, Kubernetes and Istio. We can help set up Kong API Gateways, and lots more besides!

More